Amazon Bedrock MAP Tagging using the AWS CLI

This step-by-step guide helps customers and partners tag Amazon Bedrock resources for Migration Acceleration Program (MAP) credits using a simple approach with the AWS CLI.

Effective Dec 16 2024, we're expanding MAP to include the Amazon Bedrock service and select third-party Large Language Models. This strategic expansion aims to accelerate the adoption of Generative AI technologies among AWS customers, underscoring AWS's commitment to fostering innovation in Generative AI and supporting customers in their advanced technology adoption journey. With this launch, customers can migrate and modernize not only their AI/ML workloads but also surrounding workloads such as data analytics as part of a complete offering with MAP.

Introducing inference profiles

Inference profiles are a resource of Amazon Bedrock that enable model invocation and cost management.

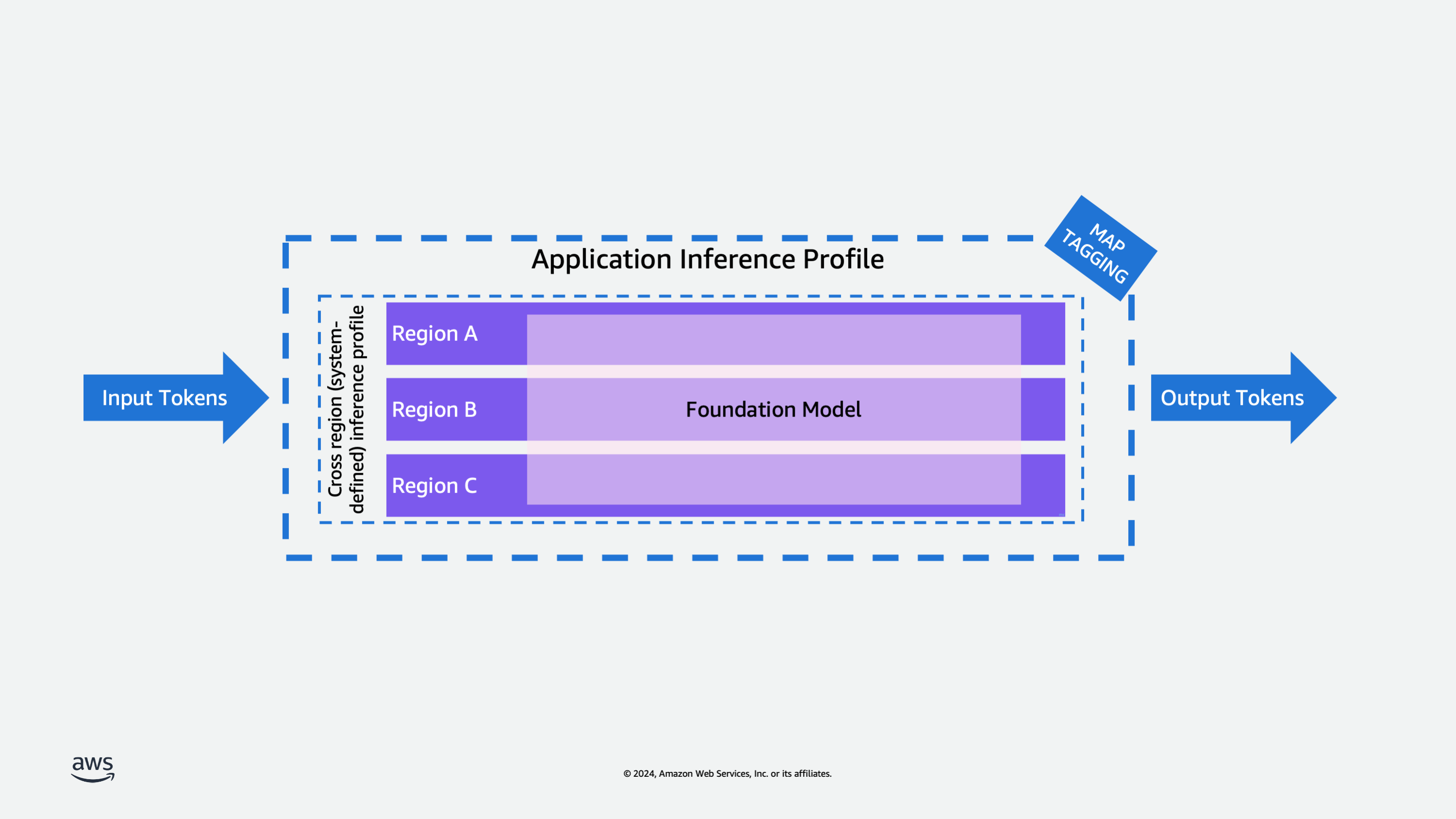

An inference profile serves as a configuration container that specifies both a foundation model and its associated AWS Regions for model invocation. Using inference profiles, organizations can effectively manage their model invocations across multiple Regions, helping to distribute workload and prevent performance bottlenecks. Inference profiles also play a crucial role in cost management and resource tracking, as they can be tagged with cost allocation tags to monitor usage and expenses across different Regions.

Amazon Bedrock offers the following types of inference profiles:

Cross-region (system-defined) inference profiles: Inference profiles that are predefined in Amazon Bedrock and include multiple Regions to which requests for a model can be routed.

Application inference profiles: Inference profiles that a user creates to track costs and model usage. You can create an inference profile that routes model invocation requests to one Region or to multiple Regions.
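As a quick way to see both kinds of profile in an account, the sketch below wraps the `aws bedrock list-inference-profiles` command and its `--type-equals` filter. The helper name `list_profile_ids` is an illustrative assumption and not part of the AWS CLI; the sketch also assumes the CLI is installed and configured with credentials and a default Region.

```shell
# list_profile_ids TYPE
# Prints the IDs of the inference profiles of the given type, one per line.
# TYPE must be SYSTEM_DEFINED (cross-region) or APPLICATION (user-created).
# The function name is hypothetical; only the `aws bedrock` call is real CLI.
list_profile_ids() {
  case "$1" in
    SYSTEM_DEFINED|APPLICATION) ;;
    *)
      echo "usage: list_profile_ids SYSTEM_DEFINED|APPLICATION" >&2
      return 2
      ;;
  esac
  # With --output text the IDs come back tab-separated, so split them.
  aws bedrock list-inference-profiles \
    --type-equals "$1" \
    --query 'inferenceProfileSummaries[].inferenceProfileId' \
    --output text | tr '\t' '\n'
}

# Usage (needs AWS credentials and a default Region):
#   list_profile_ids SYSTEM_DEFINED
#   list_profile_ids APPLICATION
```

A new account would typically show results only for `SYSTEM_DEFINED`, since application inference profiles exist only after a user creates them.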

Currently you can only create an inference profile using the Amazon Bedrock API.

A key feature of inference profiles is their integration with cost allocation tags: organizations can apply cost allocation tags to application inference profiles to track and manage expenses. When invoking models through Bedrock, users must specify a profile which contains the Region and model specifications. This profile-based approach represents an evolution from the previous direct model ARN invocation method, providing better resource management and cost tracking capabilities. Specifically, application inference profiles support MAP tagging functionality, enabling organizations to track migration progress and resource utilization within the MAP program framework.

Amazon Bedrock offers significant cost advantages for customers with predictable foundation model inference patterns. When used appropriately, provisioned throughput can deliver substantial cost savings compared to on-demand pricing, making it an economical choice for consistent workload volumes. This pricing model is particularly beneficial for customers who can forecast their model inference needs and maintain steady utilization levels. For example, enterprises running regular batch processing jobs, customer service applications with predictable chat volumes, or content generation workflows with consistent throughput requirements can optimize their costs by choosing provisioned capacity. While on-demand mode provides flexibility for variable workloads, provisioned capacity in Bedrock enables customers to optimize their AI/ML spending by committing to a specific throughput level. This approach not only helps reduce operational costs but also ensures reliable performance for applications that require consistent model access, though customers need to carefully plan their capacity requirements to maximize the cost benefits.

Prerequisites

To implement MAP tagging with Bedrock inference profiles, you create and tag application inference profiles. Before you start, make sure the following prerequisites are met:

- Must have an active MAP 2.0 Migration plan and Migration Program Engagement (MPE) number accepted after Dec 16 2024.

- Customers must gain access to supported foundation models only by using the Amazon Bedrock Console or API.

- If using volume-based discounts (such as Provisioned Throughput for supported foundation models), these must be purchased only using the Amazon Bedrock Console or API.

- Your role must have access to the inference profile API actions. If your role has the AmazonBedrockFullAccess AWS-managed policy attached, you can skip this step. Otherwise, follow the steps at Creating IAM policies and create the following policy, which allows a role to do inference profile-related actions and run model inference using all foundation models and inference profiles.

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "bedrock:InvokeModel*", "bedrock:CreateInferenceProfile" ], "Resource": [ "arn:aws:bedrock:*::foundation-model/*", "arn:aws:bedrock:*:*:inference-profile/*", "arn:aws:bedrock:*:*:application-inference-profile/*" ] }, { "Effect": "Allow", "Action": [ "bedrock:GetInferenceProfile", "bedrock:ListInferenceProfiles", "bedrock:DeleteInferenceProfile", "bedrock:TagResource", "bedrock:UntagResource", "bedrock:ListTagsForResource" ], "Resource": [ "arn:aws:bedrock:*:*:inference-profile/*", "arn:aws:bedrock:*:*:application-inference-profile/*" ] } ] }
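If you prefer to create this policy from the command line rather than the console, the following sketch writes the policy shown above to a file and then uses `aws iam create-policy` and `aws iam attach-role-policy`. The function names, the file path, the role name, and the policy name are all placeholders chosen for illustration; your identity also needs IAM permissions to create and attach policies.

```shell
# write_policy_file PATH
# Writes the inference-profile policy from this guide to PATH as JSON.
write_policy_file() {
  cat > "$1" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["bedrock:InvokeModel*", "bedrock:CreateInferenceProfile"],
      "Resource": [
        "arn:aws:bedrock:*::foundation-model/*",
        "arn:aws:bedrock:*:*:inference-profile/*",
        "arn:aws:bedrock:*:*:application-inference-profile/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "bedrock:GetInferenceProfile",
        "bedrock:ListInferenceProfiles",
        "bedrock:DeleteInferenceProfile",
        "bedrock:TagResource",
        "bedrock:UntagResource",
        "bedrock:ListTagsForResource"
      ],
      "Resource": [
        "arn:aws:bedrock:*:*:inference-profile/*",
        "arn:aws:bedrock:*:*:application-inference-profile/*"
      ]
    }
  ]
}
EOF
}

# create_and_attach_policy ROLE_NAME POLICY_NAME POLICY_FILE
# Creates a customer-managed policy from POLICY_FILE and attaches it to the role.
# All three argument values are placeholders you choose yourself.
create_and_attach_policy() {
  policy_arn=$(aws iam create-policy \
    --policy-name "$2" \
    --policy-document "file://$3" \
    --query 'Policy.Arn' --output text) || return 1
  aws iam attach-role-policy --role-name "$1" --policy-arn "$policy_arn"
}

# Usage (hypothetical names):
#   write_policy_file ./bedrock-inference-profile-policy.json
#   create_and_attach_policy MyBedrockRole BedrockInferenceProfileAccess \
#     ./bedrock-inference-profile-policy.json
```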

Using the CLI

Now create an application inference profile using the AWS CLI. This profile will serve as the foundation for your model invocations and cost tracking. The process typically involves the following steps:

- Download and install the latest version of the AWS CLI.

- Create the application inference profile with specific parameters.

  - Create an application inference profile: Open a new terminal on a machine that has the AWS CLI installed and configured and run the following CLI command:

$ aws bedrock create-inference-profile --inference-profile-name "myappinferenceprofile" --model-source "copyFrom=arn:aws:bedrock:us-east-1:123456789123:inference-profile/us.amazon.nova-pro-v1:0"

Enter a new name for the application inference profile and define a model source. The profile is created as a copy of a system inference profile, which is passed through the “copyFrom” parameter. This example uses the ARN of the Amazon Nova Pro system inference profile. The model source uses the following format:

arn:aws:bedrock:region:accountID:inference-profile/inferenceProfileID

Replace the placeholders with the Region where the new application inference profile will be created, your account ID, and your preferred system inference profile ID from the list of available system inference profiles. The CLI output shows the ARN of the created inference profile and its status as ACTIVE.

{ "inferenceProfileArn": "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/k1c3lwu20lem", "status": "ACTIVE" }

The inferenceProfileArn can be used in other inference profile-related actions, for model invocation, and with Amazon Bedrock resources.
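Because the ARN returned here is needed again for tagging, it can help to capture it in a shell variable instead of copying it by hand. The sketch below builds the `copyFrom` ARN from its parts and uses the CLI's global `--query` and `--output text` options to print only the `inferenceProfileArn` field. The function names are illustrative assumptions, and the Region, account ID, and profile ID in the usage lines are the sample values from this guide.

```shell
# system_profile_arn REGION ACCOUNT_ID PROFILE_ID
# Builds the ARN of a system-defined inference profile to copy from.
system_profile_arn() {
  printf 'arn:aws:bedrock:%s:%s:inference-profile/%s\n' "$1" "$2" "$3"
}

# create_app_profile NAME SOURCE_ARN
# Creates the application inference profile and prints only its ARN.
create_app_profile() {
  aws bedrock create-inference-profile \
    --inference-profile-name "$1" \
    --model-source "copyFrom=$2" \
    --query 'inferenceProfileArn' --output text
}

# Usage (sample values; needs AWS credentials):
#   src=$(system_profile_arn us-east-1 123456789123 us.amazon.nova-pro-v1:0)
#   PROFILE_ARN=$(create_app_profile myappinferenceprofile "$src")
#   echo "$PROFILE_ARN"
```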

- Once the profile is created, obtain its ARN and use it to apply the “map-migrated” tag to it. This is done using the AWS tagging API.

  - Use the bedrock tag-resource CLI command to tag the created inference profile with the map-migrated tag and the value of your MAP agreement ID following the tagging guidelines.

$ aws bedrock tag-resource --resource-arn "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/k1c3lwu20lem" --tags "key=map-migrated,value=mig123456789"

For --resource-arn, use the ARN generated in the previous step. Add the --tags parameter with the key-value pair shown above, replacing the value with your MAP agreement ID, prefixed with “mig”.
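A mistyped tag value is easy to miss, so a small guard before tagging can be useful. The sketch below checks that the value starts with "mig" followed by letters or digits and only then calls `aws bedrock tag-resource`. That format check is an assumption based on the example value in this guide, not an official validation rule, and both function names are hypothetical.

```shell
# is_valid_map_tag_value VALUE
# Succeeds if VALUE looks like "mig" followed by letters and digits only.
# This pattern is an assumption derived from the sample value mig123456789.
is_valid_map_tag_value() {
  case "$1" in
    mig?*) ;;                      # "mig" plus at least one more character
    *) return 1 ;;
  esac
  case "${1#mig}" in
    *[!A-Za-z0-9]*) return 1 ;;    # reject anything but letters and digits
  esac
  return 0
}

# tag_profile_for_map PROFILE_ARN TAG_VALUE
# Applies the map-migrated tag to the application inference profile.
tag_profile_for_map() {
  if ! is_valid_map_tag_value "$2"; then
    echo "tag value '$2' does not look like mig<ID>" >&2
    return 1
  fi
  aws bedrock tag-resource \
    --resource-arn "$1" \
    --tags "key=map-migrated,value=$2"
}

# Usage (sample values; needs AWS credentials):
#   tag_profile_for_map \
#     "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/k1c3lwu20lem" \
#     mig123456789
```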

- You can list and update inference profiles and review their tags. To confirm that the application inference profile is correctly tagged, you can list application profiles using the following CLI command:

$ aws bedrock list-inference-profiles --type-equals "APPLICATION"

This will list all application inference profiles showing the ARN, profile name and ID for each.

{ "inferenceProfileSummaries": [ { "inferenceProfileName": "myappinferenceprofile", "createdAt": "2025-01-14T21:21:54.414544+00:00", "updatedAt": "2025-01-14T21:21:54.414544+00:00", "inferenceProfileArn": "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/53frgmx3ozbk", "models": [ { "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0" }, { "modelArn": "arn:aws:bedrock:us-west-2::foundation-model/amazon.nova-pro-v1:0" }, { "modelArn": "arn:aws:bedrock:us-east-2::foundation-model/amazon.nova-pro-v1:0" } ], "inferenceProfileId": "53frgmx3ozbk", "status": "ACTIVE", "type": "APPLICATION" } ] }

If you know the inference profile ID, you can get the specific profile details using the CLI command:

$ aws bedrock get-inference-profile --inference-profile-identifier "53frgmx3ozbk"

{ "inferenceProfileName": "myappinferenceprofile", "createdAt": "2025-01-14T21:21:54.414544+00:00", "updatedAt": "2025-01-14T21:21:54.414544+00:00", "inferenceProfileArn": "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/53frgmx3ozbk", "models": [ { "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0" }, { "modelArn": "arn:aws:bedrock:us-west-2::foundation-model/amazon.nova-pro-v1:0" }, { "modelArn": "arn:aws:bedrock:us-east-2::foundation-model/amazon.nova-pro-v1:0" } ], "inferenceProfileId": "53frgmx3ozbk", "status": "ACTIVE", "type": "APPLICATION" }

Using the ARN of the corresponding profile, use the following CLI command to list the resource tags:

$ aws bedrock list-tags-for-resource --resource-arn "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/k1c3lwu20lem"

The output will list tags associated with the profile.

{ "tags": [ { "key": "map-migrated", "value": "mig123456789" } ] }
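When an account holds several application inference profiles, checking each one by hand gets tedious. The sketch below combines the two listing commands above into a small audit that prints, for every application inference profile, whether its map-migrated tag is present and matches the expected value. The function names and the OK / MISSING / MISMATCH labels are illustrative assumptions; the sketch relies on the AWS CLI printing `None` for an empty `--query` result in text output.

```shell
# map_tag_value PROFILE_ARN
# Prints the value of the map-migrated tag, or "None" if the tag is absent.
map_tag_value() {
  aws bedrock list-tags-for-resource \
    --resource-arn "$1" \
    --query "tags[?key=='map-migrated'].value | [0]" \
    --output text
}

# tag_status EXPECTED ACTUAL
# Prints OK, MISSING, or MISMATCH for one profile.
tag_status() {
  if [ -z "$2" ] || [ "$2" = "None" ]; then
    echo "MISSING"
  elif [ "$2" = "$1" ]; then
    echo "OK"
  else
    echo "MISMATCH"
  fi
}

# audit_map_tags EXPECTED
# Prints "<profile ARN><TAB><status>" for every application inference profile.
audit_map_tags() {
  aws bedrock list-inference-profiles \
    --type-equals APPLICATION \
    --query 'inferenceProfileSummaries[].inferenceProfileArn' \
    --output text | tr '\t' '\n' |
  while read -r arn; do
    # Skip blank lines and the "None" placeholder of an empty result.
    [ -n "$arn" ] || continue
    [ "$arn" != "None" ] || continue
    printf '%s\t%s\n' "$arn" "$(tag_status "$1" "$(map_tag_value "$arn")")"
  done
}

# Usage (sample value; needs AWS credentials):
#   audit_map_tags mig123456789
```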

This tagging structure enables several important capabilities including proper cost allocation tracking for MAP program requirements, clear identification of migrated workloads, and simplified resource management and monitoring.

The “map-migrated” tag serves as an identifier that helps track your migration progress and ensures proper attribution of resource usage within the MAP program framework. All subsequent model invocations using this tagged profile will be properly tracked and accounted for in your MAP metrics.
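For usage to be attributed to the tagged profile, requests need to go through that profile rather than a bare model ID. As a hedged illustration, the sketch below passes the application inference profile ARN as `--model-id` to `aws bedrock-runtime converse` and refuses anything that is not an application inference profile ARN. The function names are hypothetical, the ARN check is a simple pattern match rather than a full validation, and the prompt is inserted into the JSON without escaping, so it should not contain double quotes or backslashes.

```shell
# is_app_profile_arn VALUE
# Succeeds if VALUE has the shape of an application inference profile ARN.
is_app_profile_arn() {
  case "$1" in
    arn:aws*:bedrock:*:*:application-inference-profile/?*) return 0 ;;
    *) return 1 ;;
  esac
}

# invoke_with_profile PROFILE_ARN PROMPT
# Sends PROMPT through the tagged profile and prints the model's text reply.
# PROMPT is not JSON-escaped here; keep it free of quotes and backslashes.
invoke_with_profile() {
  if ! is_app_profile_arn "$1"; then
    echo "refusing to invoke: '$1' is not an application inference profile ARN" >&2
    return 1
  fi
  aws bedrock-runtime converse \
    --model-id "$1" \
    --messages "[{\"role\":\"user\",\"content\":[{\"text\":\"$2\"}]}]" \
    --query 'output.message.content[0].text' --output text
}

# Usage (sample ARN; needs AWS credentials and model access):
#   invoke_with_profile \
#     "arn:aws:bedrock:us-east-1:123456789123:application-inference-profile/k1c3lwu20lem" \
#     "Hello"
```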

To create an application inference profile for one Region, specify a foundation model. Usage and costs for requests made to that Region with that model will be tracked.

To create an application inference profile for multiple Regions, specify a cross-region (system-defined) inference profile. The inference profile will route requests to the Regions defined in the cross-region (system-defined) inference profile that you choose. Usage and costs for requests made to the Regions in the inference profile will be tracked.
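The two cases differ only in the ARN passed to `copyFrom`. The sketch below builds the `--model-source` value for either case, using the foundation model and system inference profile ARN formats that appear in the sample output earlier in this guide. The function name `model_source_for` and its `single` / `multi` keywords are assumptions made for illustration.

```shell
# model_source_for SCOPE REGION MODEL_OR_PROFILE_ID [ACCOUNT_ID]
# SCOPE "single": copy from a foundation model (one Region).
# SCOPE "multi":  copy from a system-defined inference profile (several Regions);
#                 this form also needs the account ID.
model_source_for() {
  case "$1" in
    single)
      printf 'copyFrom=arn:aws:bedrock:%s::foundation-model/%s\n' "$2" "$3"
      ;;
    multi)
      if [ -z "${4:-}" ]; then
        echo "multi needs an account ID as fourth argument" >&2
        return 2
      fi
      printf 'copyFrom=arn:aws:bedrock:%s:%s:inference-profile/%s\n' "$2" "$4" "$3"
      ;;
    *)
      echo "scope must be single or multi" >&2
      return 2
      ;;
  esac
}

# Usage (sample values; the profile names are placeholders):
#   aws bedrock create-inference-profile \
#     --inference-profile-name "single-region-profile" \
#     --model-source "$(model_source_for single us-east-1 amazon.nova-pro-v1:0)"
#   aws bedrock create-inference-profile \
#     --inference-profile-name "multi-region-profile" \
#     --model-source "$(model_source_for multi us-east-1 us.amazon.nova-pro-v1:0 123456789123)"
```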